5 Must-Know Features Behind Colossal-AI’s Success

Colossal-AI is an easy-to-use deep learning system that enables users to maximize the efficiency of AI deployments whilst drastically reducing costs. If you would like to learn more about the project, do check out our GitHub repository: https://github.com/hpcaitech/ColossalAI

In celebration of the first official release of Colossal-AI, we describe some of its awesome new features that we believe are responsible for its success:

- A brand-new ZeRO module

- A beta version of the Profiler TensorBoard plugin (finer-grained monitoring than PyTorch for communication, memory, etc.)

- An MoE (Mixture of Experts) feature and example

- Bug fixes and complete tutorials

- Compatibility with Hugging Face

We also cover some of the core features that have made Colossal-AI a popular choice for training and deploying large AI models.

Professional Help with Large Model Training

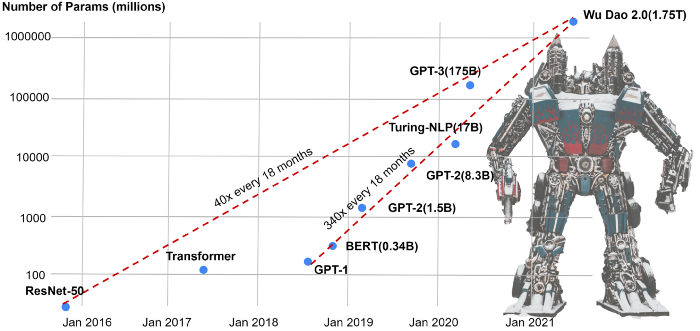

Firstly, it’s important to note that Colossal-AI solves an important problem. In recent years, deep learning has seen a surge of large models dominating performance charts. The size of frontier AI models has increased ten-thousand-fold in just a few years, far outpacing the growth of hardware capabilities. Not only are these large AI models far beyond the memory capacity of a single GPU, but training them would also take hundreds of years on a single GPU.

It thus becomes imperative to make more efficient use of each GPU in order to achieve high-performance training of large AI models. This is precisely what Colossal-AI does, without burdening the programmer.

Colossal-AI empowers the developer to write performant code for AI deployments through a variety of techniques such as multi-dimensional parallelism and better tools for the maintenance and deployment of models. It allows the programmer to deploy large AI models quickly and efficiently, and to train them in a distributed manner with only minimal code-level modifications. Colossal-AI’s claims to fame are its efficient multi-dimensional parallelism, GPU memory optimization, large-scale optimizer library and fine-grained monitoring.

Let’s dive into some of these key features to see what makes Colossal-AI really great!

[Key Features] Multi-dimensional Parallelism

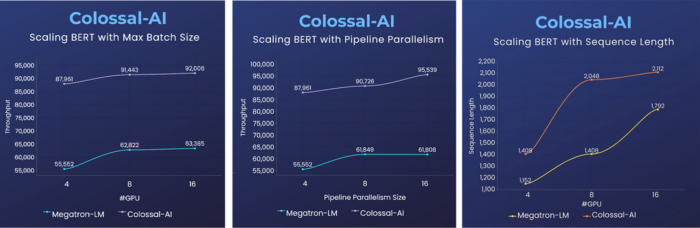

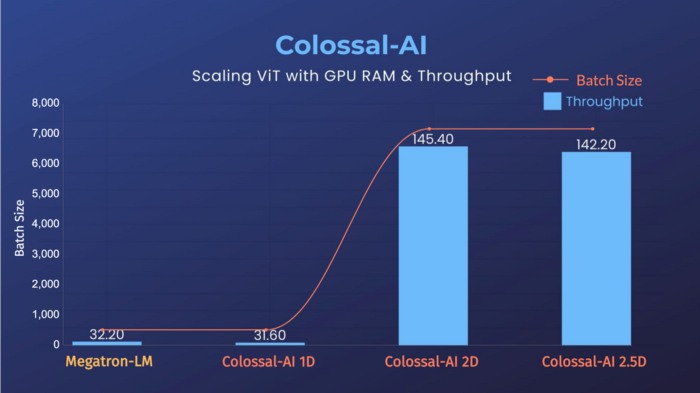

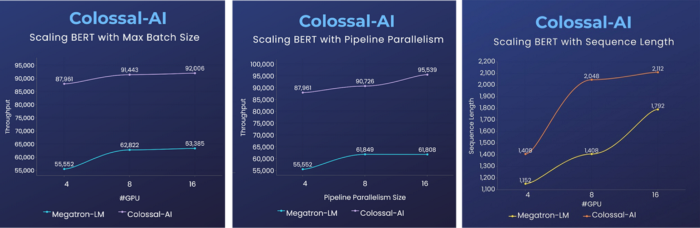

Existing solutions apply only a limited set of parallelism modes when training large-scale AI models, primarily data parallelism, one-dimensional tensor parallelism and pipeline parallelism. Colossal-AI, however, provides further modes of parallelism, including 2D, 2.5D and 3D tensor parallelism as well as sequence parallelism. It also offers multi-dimensional hybrid parallel solutions that combine these modes.

Amongst these new parallel techniques, high-dimensional tensor parallelism can greatly reduce memory consumption, improve communication efficiency, and make more efficient use of computing resources.
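To make the memory argument concrete, here is a minimal, framework-free sketch of the idea behind 2D tensor parallelism: the activation and the weight matrix are both cut into a q x q grid of blocks, so each simulated device stores only one block of each. The function names (`matmul_2d`, `elements_per_device`) are hypothetical and are not part of Colossal-AI’s API. The memory count is a simplified model that assumes 1D tensor parallelism keeps a full copy of the input activation on every device.

```python
def matmul(a, b):
    """Dense product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def block(m, i, j, q):
    """Block (i, j) of matrix m when it is split into a q x q grid."""
    rows, cols = len(m) // q, len(m[0]) // q
    return [row[j * cols:(j + 1) * cols] for row in m[i * rows:(i + 1) * rows]]


def matmul_2d(x, w, q):
    """Simulate Y = X @ W on a q x q device grid; device (i, j) produces block Y[i][j]."""
    out = {}
    for i in range(q):
        for j in range(q):
            acc = None
            for k in range(q):
                # On real hardware, X[i][k] and W[k][j] would reach device (i, j)
                # through broadcasts along its grid row and column.
                part = matmul(block(x, i, k, q), block(w, k, j, q))
                acc = part if acc is None else add(acc, part)
            out[(i, j)] = acc
    return out


def assemble(blocks, q):
    """Stitch the q x q grid of output blocks back into one matrix."""
    return [
        [v for j in range(q) for v in blocks[(i, j)][r]]
        for i in range(q)
        for r in range(len(blocks[(i, 0)]))
    ]


def elements_per_device(n, p, mode):
    """Simplified count of elements held per device for an n x n activation and weight."""
    if mode == "1d":  # weight split p ways, activation replicated on every device
        return n * n + n * n // p
    if mode == "2d":  # activation and weight both split across the p devices
        return 2 * n * n // p
    raise ValueError(f"unknown mode: {mode}")


x = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
w = [[1, 0, 2, 0], [0, 1, 0, 2], [3, 0, 1, 0], [0, 3, 0, 1]]
# The blocked result matches the ordinary matrix product.
assert assemble(matmul_2d(x, w, q=2), q=2) == matmul(x, w)
print(elements_per_device(1024, 16, "1d"), elements_per_device(1024, 16, "2d"))
```

Under this simplified model, the replicated activation dominates per-device storage in the 1D case, whereas the 2D layout shrinks both operands as devices are added.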

Colossal-AI even engineers a new form of parallelism: sequence parallelism, which can be applied to large images, video, long text, long-term medical monitoring records and other sequential data. It enables machines to process sequences that were previously too long to handle.
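One way to picture sequence parallelism is as a ring: each device keeps only its own slice of the sequence, and chunks of keys are passed from device to device until every local query has been scored against every key. The sketch below simulates that communication pattern in plain Python for the attention-score step only. The function names are hypothetical, softmax and the value projection are left out, and this is an illustration rather than Colossal-AI’s implementation.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def full_scores(queries, keys):
    """Reference: all query-key scores computed on a single device."""
    return [[dot(q, k) for k in keys] for q in queries]


def ring_scores(queries, keys, num_devices):
    """Simulate devices that each own one sequence chunk and pass key chunks around a ring."""
    n = len(queries)
    chunk = n // num_devices  # assumes the sequence length divides evenly
    q_chunks = [queries[d * chunk:(d + 1) * chunk] for d in range(num_devices)]
    k_chunks = [keys[d * chunk:(d + 1) * chunk] for d in range(num_devices)]
    # scores[d][r][c]: score of local query r on device d against global key c
    scores = [[[0] * n for _ in range(chunk)] for _ in range(num_devices)]
    held = list(range(num_devices))  # which key chunk each device currently holds
    for _ in range(num_devices):
        for d in range(num_devices):
            src = held[d]
            for r, q in enumerate(q_chunks[d]):
                for c, k in enumerate(k_chunks[src]):
                    scores[d][r][src * chunk + c] = dot(q, k)
        # Every device hands its key chunk to the next device in the ring.
        held = [held[(d - 1) % num_devices] for d in range(num_devices)]
    return [row for d in range(num_devices) for row in scores[d]]


queries = [[i, i + 1] for i in range(8)]
keys = [[2 * i, 1] for i in range(8)]
# The distributed result matches the single-device reference.
assert ring_scores(queries, keys, num_devices=4) == full_scores(queries, keys)
```

No simulated device ever stores more than one chunk of queries and one chunk of keys at a time, which is what allows the total sequence length to grow with the number of devices.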

[New Features] GPU Memory Optimization

Next up are Colossal-AI’s GPU memory optimization innovations. It combines multiple GPU memory optimization technologies, including multi-dimensional parallelism, ZeRO (Zero Redundancy Optimizer) memory redundancy elimination, CPU offloading, gradient checkpointing and Automatic Mixed Precision (AMP), to help users avoid memory bottlenecks and reduce training resource requirements.
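As a rough illustration of why eliminating redundancy matters, the sketch below applies the per-GPU memory accounting commonly given for ZeRO with mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for optimizer states (fp32 weights, momentum and variance). The function name `zero_memory_gb` and the model size are illustrative assumptions, activations and buffers are ignored, and the actual footprint of Colossal-AI’s ZeRO module may differ.

```python
def zero_memory_gb(num_params, num_gpus, stage):
    """Per-GPU memory for model states in GB (1e9 bytes), ignoring activations.

    stage 0: plain data parallelism, everything replicated
    stage 1: optimizer states partitioned across GPUs
    stage 2: gradients partitioned as well
    stage 3: fp16 parameters partitioned as well
    """
    fp16_params = 2 * num_params
    fp16_grads = 2 * num_params
    optimizer_states = 12 * num_params  # fp32 weights, momentum, variance
    if stage >= 1:
        optimizer_states /= num_gpus
    if stage >= 2:
        fp16_grads /= num_gpus
    if stage >= 3:
        fp16_params /= num_gpus
    return (fp16_params + fp16_grads + optimizer_states) / 1e9


# Hypothetical example: a 7.5-billion-parameter model on 64 GPUs.
for stage in range(4):
    print(f"stage {stage}: {zero_memory_gb(7.5e9, 64, stage):.1f} GB per GPU")
```

In this accounting the optimizer states make up three quarters of the replicated footprint, so partitioning them alone already removes most of the redundancy.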

[Product Improvement & New Features] Ease of Use

Finally, Colossal-AI prizes simplicity and ease of use. It is designed to be compatible with PyTorch, allowing existing projects to work with minimal modifications. In addition, the system is extensible, so new features can be added as needed whilst maintaining its performance.

It adds a new fine-grained monitoring tool that allows developers to monitor the network, communication, and memory states within an iteration. Compared to existing frameworks, which can only record the training process at the granularity of whole iterations, Colossal-AI makes it easy for developers to accurately analyze and debug deep learning code.

Lastly, Colossal-AI provides a large-scale optimizer library including efficient optimizers like LAMB and LARS, which extend the training batch size to 65536 for the first time. It is also compatible with all of PyTorch’s own optimizers. It is now easier than ever to use large batch optimization methods to train large AI models with Colossal-AI.
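For readers unfamiliar with large-batch optimizers, the sketch below shows the layer-wise scaling idea at the heart of LARS: each layer’s step is rescaled by the ratio of its weight norm to its gradient norm. It is a simplified, momentum-free version written in plain Python. The function names and default values are illustrative assumptions, returning 1.0 when a norm is zero is one common convention, and none of this is Colossal-AI’s or PyTorch’s optimizer API.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(v * v for v in values))


def lars_local_lr(weights, grads, trust_coefficient=0.001, weight_decay=0.0):
    """Layer-wise learning-rate multiplier used by LARS."""
    w_norm, g_norm = l2_norm(weights), l2_norm(grads)
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # fall back to the global learning rate
    return trust_coefficient * w_norm / (g_norm + weight_decay * w_norm)


def lars_step(weights, grads, global_lr, trust_coefficient=0.001, weight_decay=0.0):
    """One momentum-free LARS update for a single layer."""
    local_lr = lars_local_lr(weights, grads, trust_coefficient, weight_decay)
    return [
        w - global_lr * local_lr * (g + weight_decay * w)
        for w, g in zip(weights, grads)
    ]


layer_weights = [3.0, 4.0]
layer_grads = [0.6, 0.8]
print(lars_local_lr(layer_weights, layer_grads))
print(lars_step(layer_weights, layer_grads, global_lr=1.0))
```

Because the multiplier divides by the gradient norm, a layer whose gradients are a hundred times larger takes the same-sized step when weight decay is zero, which helps keep training stable as the batch size and learning rate grow.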

[Benefits] Solutions for Industry

This is all great in theory, but what about in practice? Luckily, Colossal-AI has proven its capabilities in its application to hard problems across a variety of industries such as autonomous driving, cloud computing, retail, medicine and chip production. It has also established cooperation with top open source AI organizations such as Hugging Face.

- Helping Drug Research and Development: FastFold

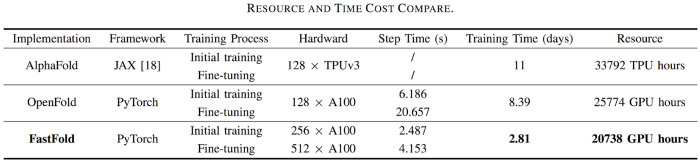

One such monumental application is to the domain of protein folding. Recently, AlphaFold was selected by Science and Nature as one of the top 10 scientific breakthroughs in 2021 for its powerful AI ability to predict protein structure. Nonetheless, it suffers from lengthy training times and high costs.

We applied Colossal-AI to develop an accelerated AI model to predict protein structures: FastFold. It introduces Colossal-AI’s novel GPU optimization and large-model training techniques to AlphaFold training and inference. FastFold outperforms the solutions from Google and Columbia University, reducing AlphaFold training time from 11 days to 67 hours at a lower total cost, whilst achieving a 9.3x to 11.6x speedup in long-sequence inference.

- GPT-3 Training with Half of the Machines

For established state-of-the-art models, Colossal-AI again makes headway. For the well-known GPT-3 model, Colossal-AI requires only half the computational resources to start training compared with NVIDIA’s Megatron-LM. Alternatively, with the same computational resources, Colossal-AI delivers a speedup of ~11%, which can reduce the cost of training GPT-3 by over a million dollars!

Your Thoughts?

Colossal-AI will roll out new and innovative features regularly. We always welcome suggestions and discussions from the community, and we are more than willing to help if you encounter any issues. You can raise an issue in our GitHub repository or create a discussion topic in the forum. Your feedback is the best teacher for Colossal-AI.

Portal

Code: https://github.com/hpcaitech/ColossalAI

Paper: https://arxiv.org/abs/2110.14883

Tutorial: https://www.colossalai.org/

Join Us

HPC-AI Tech is a global team whose core members come from the University of California, Berkeley, Stanford University, Tsinghua University, Peking University, the National University of Singapore, Nanyang Technological University, and other top universities around the world. Currently, HPC-AI Tech is recruiting full-time and intern developers for AI systems, architecture, compilers, networking, CUDA, SaaS and k8s core systems, as well as open source program operators and sales personnel.

HPC-AI Tech provides highly competitive compensation packages, and our staff can work remotely. You are also welcome to refer outstanding candidates to HPC-AI Tech. If they successfully join, we will provide you with a referral bonus of thousands of US dollars.

Send your resume to: hr@hpcaitech.com

Prof. Yang You, Founder of HPC-AI Tech

Ph.D., University of California, Berkeley

IPDPS/ICPP Best Paper

ACM/IEEE George Michael HPC Fellowship

Forbes 30 Under 30 (Asia 2021)

IEEE-CS Outstanding Newcomer Award in Supercomputing

UC Berkeley EECS Lotfi A. Zadeh Outstanding Graduate Award

Prof. James Demmel, CSO of HPC-AI Tech

Distinguished Professor, University of California, Berkeley

ACM/IEEE Fellow

Member of the National Academy of Sciences, the National Academy of Engineering, and the American Academy of Arts and Sciences

Funding

HPC-AI Tech raised 4.7 million USD from top VC firms in just 3 months after the company was founded. For more information, please email contact@hpcaitech.com
