Colossal-AI is the best tool for enhancing your deep learning performance and cost-efficiency.

Best Performance

10x

Team Streamlining

10x

Lowest Cost

100x

Easy to Use

Multi-system Compatible

Unlimited Expansion

10x Faster

100x Size

Model capacity on the same hardware increases a hundredfold

1000x GPUs

- Easy to Use: Native PyTorch compatibility; start with a few lines of code.

- Comprehensive Solutions: Integration and optimisation of the latest cutting-edge technologies.

Large Language Models

AI for Science

Biomedical

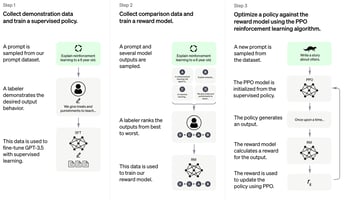

ChatGPT

SFT & CPT

Fine-tune a model in only half a day on a $1,000 budget, with results comparable to mainstream large models.

End-to-end delivery, from data collection and preparation to inference deployment.

Pretraining from Scratch

Enterprise-wide Knowledge Base

Open Sora

The world's first open-source video generation model with a Sora-like architecture, plus a complete low-cost solution

Scalable Clusters

Flexible Terms

Multiple Clusters

Instant Launch

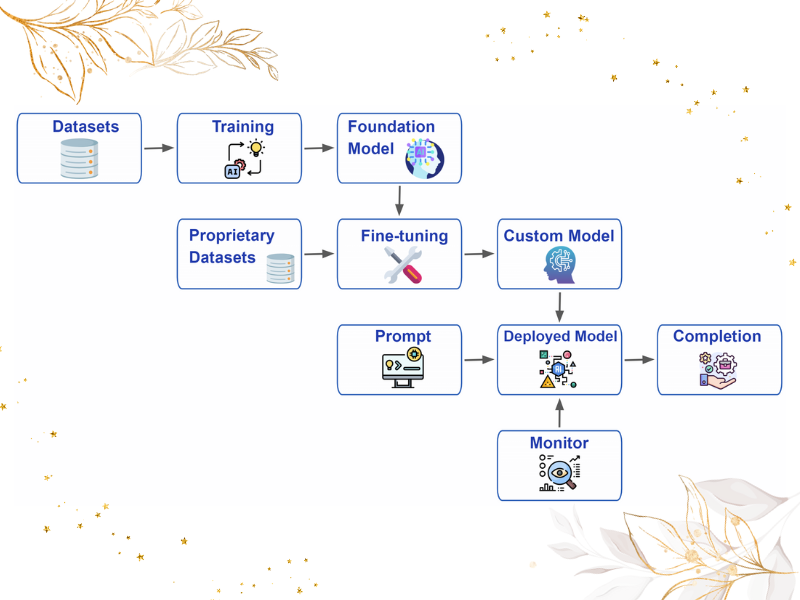

Full coverage of large model development and deployment: data collection and preparation, model training and fine-tuning, inference deployment, and end-to-end delivery

Integrated full-stack software and hardware resources; pay as you go, with no long-term commitment

Colossal-AI Software Stack Optimisation and Adaptation

10X performance acceleration, 100X cost savings

Maximise resource utilisation, minimise large model costs

Large AI model training, fine-tuning, inference, and model building

One-click management, development, and application of large AI models with zero or low code

Low-cost automatic elastic scaling

Colossal-AI is trusted by leading AI teams worldwide

Colossal-AI Case Study

- Provided computing power and integrated software and hardware solutions to many industries, universities, and AI companies.

- Collaborated with many of the world's leading technology giants across industry, academia, and research, and won outstanding paper awards at top conferences such as AAAI and ACL.

A Fortune 500 company

- Developed a multi-modal agent, improving multi-task performance by 114%

- Optimize multi-modal reasoning performance

A Fortune 500 company

- Provide large AI model software infrastructure for emerging hardware

- Improved performance and price-performance on emerging hardware by 30%

A Fortune 500 company

- Pre-train a privately deployed large model with hundreds of billions of parameters on a thousand-GPU ("qianka") cluster

- Improve multi-modal reasoning performance 8-fold

A Chinese Fortune 500

- Fine-tune a privately deployed large model with hundreds of billions of parameters using RLHF

- Increase ChatGPT PPO speed 10-fold

A Chinese Fortune 500

- Conduct research and development in cutting-edge fields such as autonomous driving algorithms, multi-modal fusion, and knowledge distillation

- Optimize algorithms and improve model training and inference speed

A Southeast Asian technology giant

- Build high-quality, multi-lingual ChatGPT-like enterprise customer service at low cost

- Increase large language model inference speed 13-fold

One of the top three AI R&D institutions in Asia

- Improve the inference speed of large language models by 30%

- Jointly explore the cutting-edge technology of AI large models

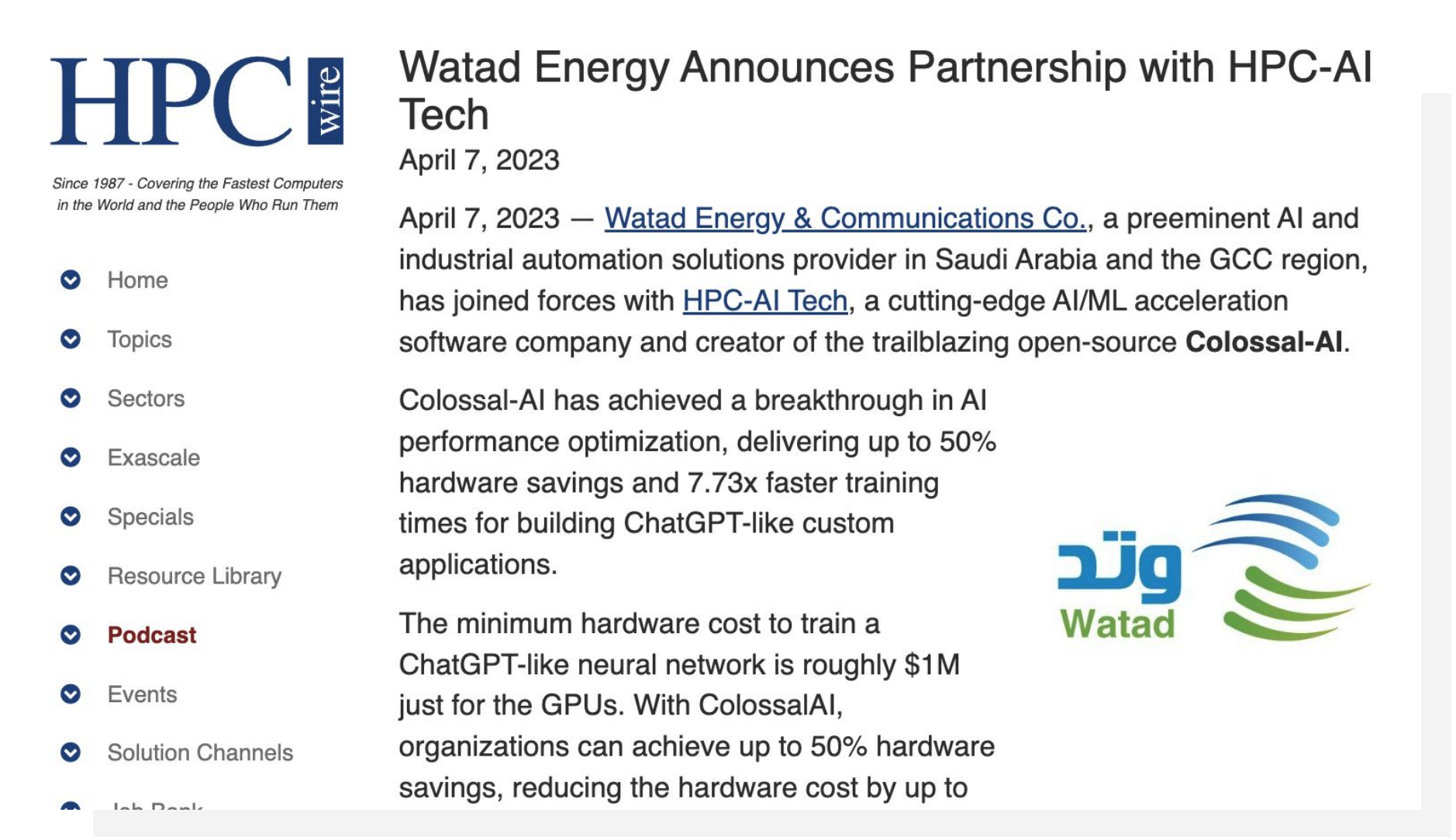

A Middle Eastern energy company

- Develop a ChatGPT-like model for Arabic and the energy industry

- Build high-quality large AI models at low cost

A computing power center

- Provide privately deployed large AI model software infrastructure for AI computing centers

- Jointly operate computing power marketing and AI solutions

A leading Chinese media company

- Develop a ChatGPT-like model for Chinese and the media industry

- Build high-quality large AI models at low cost

A medical unicorn

- Provide optimization solutions for protein prediction models for the biopharmaceutical industry

- Improved AlphaFold2-like model training/inference 11-fold

An Internet unicorn

- Build a high-quality ChatGPT-like model with unified multi-role IP at low cost

- Provide privately deployed all-in-one training and inference machine solutions

Community Recognition

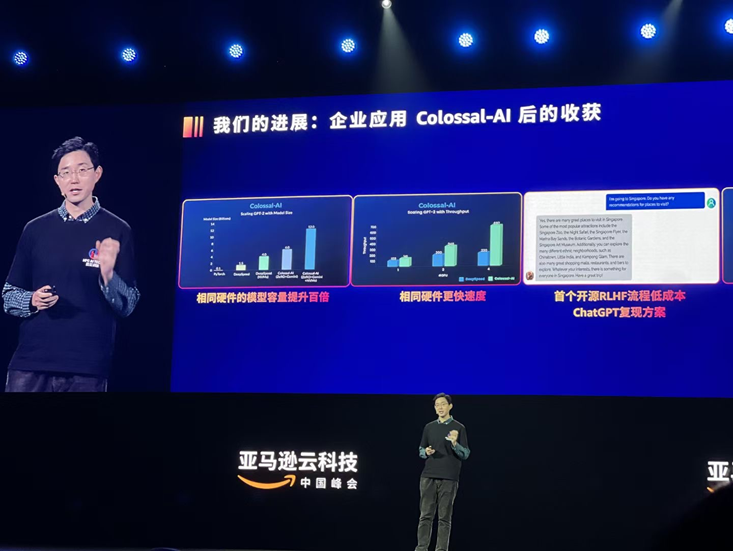

The only start-up representative at AWS China Summit

International Conference on Machine Learning

Reported by HPCwire, the world's number one supercomputing media outlet

Officially accepted at top international AI and high-performance computing conferences and events

Loved by 36,000+ Global Users

Top blog posts

Replicate ChatGPT Training Quickly and Affordable with Open Source Colossal-AI

February 14, 2023

Diffusion Pretraining and Hardware Fine-Tuning Can Be Almost 7X Cheaper! Colossal-AI's Open Source Solution Accelerates AIGC at a Low Cost
